Make your friend happy by getting the Hideeni doll or get it for yourself if you have multiple accounts!

* Open Cheat Engine;

* Tick hex & copy 000026840FE44589

* Change value to 8 bytes

* Tick “also scan read-only memory”;

* First Scan;

* Right click, disassemble this memory region;

* You will get an address & below it an address that starts with je xxxxxx

example Je c0bff1234;

* Right click and choose “replace with code that does nothing” (or hit delete on your keyboard)

* Voila Hideeni is chasing you;)

Now your friends (or you, from another account) can claim the gift.

Credits: 2010 hacks games

Pet Society: Find Hideeni!

1/16/2010 10:53:00 PM

Ee Blog

Posted in

Cheats,

Pet Society

Mafia Wars: Special Energy Pack for Mafia Wars Toolbar

1/16/2010 10:48:00 PM

Ee Blog

- For the normal energy pack, we have to wait 23 hours, and our energy increases to 125% of max energy.

- For the Special energy pack, we have to wait 8 hours, and our energy increases to 25% of max energy. It keeps giving a special energy pack after we have used one.

To download the toolbar, use this link: Mafia Wars Toolbar

Posted in

Mafia Wars,

News,

Tips and Tricks

Year 2038 Problem: Unix Millennium bug, or Y2K38

1/11/2010 08:52:00 PM

Ee Blog

What's wrong with Unix systems in the year 2038?

The typical Unix timestamp (time_t) stores a date and time as a 32-bit signed integer number representing, roughly speaking, the number of seconds since January 1, 1970; in 2038, this number will roll over (exceed 32 bits), causing the Year 2038 problem (also known as Unix Millennium bug, or Y2K38). To solve this problem, many systems and languages have switched to a 64-bit version, or supplied alternatives which are 64-bit.

What is the year 2038 bug?

In the first month of the year 2038 C.E. many computers will encounter a date-related bug in their operating systems and/or in the applications they run. This can result in incorrect and grossly inaccurate dates being reported by the operating system and/or applications. The effect of this bug is hard to predict, because many applications are not prepared for the resulting "skip" in reported time - anywhere from 1901 to a "broken record" repeat of the reported time at the second the bug occurs. Also, leap seconds may make some small adjustment to the actual time the bug expresses itself. I expect this bug to cause serious problems on many platforms, especially Unix and Unix-like platforms, because these systems will "run out of time". Starting at GMT 03:14:07, Tuesday, January 19, 2038, I fully expect to see lots of systems around the world breaking magnificently: satellites falling out of orbit, massive power outages (like the 2003 North American blackout), hospital life support system failures, phone system interruptions (including 911 emergency services), banking system crashes, etc. One second after this critical second, many of these systems will have wildly inaccurate date settings, producing all kinds of unpredictable consequences. In short, many of the dire predictions for the year 2000 are much more likely to actually occur in the year 2038! Consider the year 2000 just a dry run. In case you think we can sit on this issue for another 30 years before addressing it, consider that reports of temporal echoes of the 2038 problem are already starting to appear in future date calculations for mortgages and vital statistics! Just wait til January 19, 2008, when 30-year mortgages will start to be calculated.

What causes it?

What makes January 19, 2038 a special day? Unix and Unix-like operating systems do not calculate time in the Gregorian calendar; they simply count time in seconds since their arbitrary "birthday", GMT 00:00:00, Thursday, January 1, 1970 C.E. The industry-wide practice is to use a 32-bit variable for this number (32-bit signed time_t). Imagine an odometer with 32 wheels, each marked to count from 0 to 1 (for base-2 counting), with the end wheel used to indicate a positive or negative integer. The largest possible value for this integer is 2**31-1 = 2,147,483,647 (over two billion). 2,147,483,647 seconds after Unix's birthday corresponds to GMT 03:14:07, Tuesday, January 19, 2038. One second later, many Unix systems will revert to their birth date (like an odometer rollover from 999999 to 000000). Because the end bit indicating positive/negative integer may flip over, some systems may revert the date to 20:45:52, Friday, December 13, 1901 (which corresponds to GMT 00:00:00 Thursday, January 1, 1970 minus 2**31 seconds). Hence the media may nickname this the "Friday the Thirteenth Bug". I have read unconfirmed reports that the rollover could even result in a system time of December 32, 1969 on some legacy systems!
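The arithmetic above can be checked with a short Python sketch. It does not touch the system clock; it only simulates a 32-bit signed counter, and the helper names (`wrap_int32`, `to_date`) are made up for this illustration.

```python
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)  # Unix "birthday", treated here as GMT
INT32_MAX = 2**31 - 1         # largest value of a 32-bit signed integer


def wrap_int32(n):
    """Simulate storing n in a 32-bit signed integer (two's complement wraparound)."""
    return (n + 2**31) % 2**32 - 2**31


def to_date(seconds):
    """Convert a count of seconds since the epoch into a calendar date."""
    return EPOCH + timedelta(seconds=seconds)


last_good = to_date(INT32_MAX)                       # the critical second
after_rollover = to_date(wrap_int32(INT32_MAX + 1))  # one second later, sign bit flipped

print(last_good.isoformat(sep=" "))       # 2038-01-19 03:14:07
print(after_rollover.isoformat(sep=" "))  # 1901-12-13 20:45:52
```

This models only the "sign bit flips" outcome described above; a real system may fail differently.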

What operating systems, platforms, and applications are affected by it?

A quick check with the following Perl script may help determine if your computers will have problems (this requires Perl to be installed on your system, of course):

    #!/usr/bin/perl
    #
    # I've seen a few versions of this algorithm
    # online, I don't know who to credit. I assume
    # this code to be GPL unless proven otherwise.
    # Comments provided by William Porquet, February 2004.
    # You may need to change the line above to
    # reflect the location of your Perl binary
    # (e.g. "#!/usr/local/bin/perl").
    # Also change this file's name to '2038.pl'.
    # Don't forget to make this file +x with "chmod".
    # On Linux, you can run this from a command line like this:
    # ./2038.pl
    use POSIX;
    # Use POSIX (Portable Operating System Interface),
    # a set of standard operating system interfaces.
    $ENV{'TZ'} = "GMT";
    # Set the Time Zone to GMT (Greenwich Mean Time) for date calculations.
    for ($clock = 2147483641; $clock < 2147483651; $clock++)
    {
        print ctime($clock);
    }
    # Count up in seconds of Epoch time just before and after the critical event.
    # Print out the corresponding date in Gregorian calendar for each result.
    # Are the date and time outputs correct after the critical event second?

I have only seen a mere handful of operating systems that appear to be unaffected by the year 2038 bug so far. For example, the output of this script on Debian GNU/Linux (kernel 2.4.22):

    Tue Jan 19 03:14:01 2038
    Tue Jan 19 03:14:02 2038
    Tue Jan 19 03:14:03 2038
    Tue Jan 19 03:14:04 2038
    Tue Jan 19 03:14:05 2038
    Tue Jan 19 03:14:06 2038
    Tue Jan 19 03:14:07 2038
    Fri Dec 13 20:45:52 1901
    Fri Dec 13 20:45:52 1901
    Fri Dec 13 20:45:52 1901

Windows 2000 Professional with ActivePerl 5.8.3.809 fails in such a manner that it stops displaying the date after the critical second:

    C:\>perl 2038.pl
    Mon Jan 18 22:14:01 2038
    Mon Jan 18 22:14:02 2038
    Mon Jan 18 22:14:03 2038
    Mon Jan 18 22:14:04 2038
    Mon Jan 18 22:14:05 2038
    Mon Jan 18 22:14:06 2038
    Mon Jan 18 22:14:07 2038

So far, the few operating systems that I haven't found susceptible to the 2038 bug include very new versions of Unix and Linux ported to 64-bit platforms. Recent versions of QNX seem to take the temporal transition in stride. If you'd like to try this 2038 test yourself on whatever operating systems and platforms you have handy, download the Perl source code here. A gcc-compatible ANSI C work-alike version is available here. A Python work-alike version is available here. Feel free to email your output to me for inclusion on a future revision of this Web page. I have collected many reader-submitted sample outputs from various platforms and operating systems and posted them here.

For a recent relevant example of the wide-spread and far-reaching extent of the 2038 problem, consider the Mars rover Opportunity that had a software crash which resulted in it "phoning home" while reporting the year as 2038 (see paragraph under heading "Condition Red").

[root@alouette root]# hwclock --set --date="1/18/2038 22:14:07"

RTC_SET_TIME: Invalid argument

ioctl() to /dev/rtc to set the time failed.

[root@alouette root]# hwclock --set --date="1/18/2038 22:14:08"

date: invalid date `1/18/2038 22:14:08'

The date command issued by hwclock returned unexpected results.

The command was:

date --date="1/18/2038 22:14:08" +seconds-into-epoch=%s

Consider code that averages two time values as (t1 + t2)/2. It should be quite obvious that this calculation fails when the time values pass 30 bits. The exact day can be calculated by making a small Unix C program, as follows:

    echo 'long q=(1UL<<30);int main(){return puts(asctime(localtime(&q)));};' > x.c && cc x.c && ./a.out

Replace (t1 + t2)/2 with (((long long) t1 + t2) / 2) (POSIX/SuS) or (((double) t1 + t2) / 2) (ANSI). (Note that using t1/2 + t2/2 gives a roundoff error.)

What can I do about it?
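As one concrete illustration of the averaging fix, here is a minimal Python sketch. Python integers never overflow, so the 32-bit behaviour is simulated with a hypothetical `wrap_int32` helper; `naive_average` and `widened_average` are likewise names invented for this example.

```python
from datetime import datetime, timedelta


def wrap_int32(n):
    """Simulate storing n in a 32-bit signed integer (two's complement wraparound)."""
    return (n + 2**31) % 2**32 - 2**31


def naive_average(t1, t2):
    """(t1 + t2) / 2 where the intermediate sum is held in 32 bits."""
    return wrap_int32(t1 + t2) // 2


def widened_average(t1, t2):
    """Same average with the sum held in a wider type (like the long long cast)."""
    return (t1 + t2) // 2


# The first moment at which two equal timestamps overflow when added: 2**30 seconds.
threshold = 1 << 30
print(datetime(1970, 1, 1) + timedelta(seconds=threshold))  # 2004-01-10 13:37:04 (GMT)

print(naive_average(threshold, threshold))    # negative: the sum wrapped around
print(widened_average(threshold, threshold))  # 1073741824, the correct average
```

The sketch only shows why the sum needs more than 32 bits; it does not reproduce any particular platform's behaviour.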

If you are a programmer or a systems integrator who needs to know what you can do to fix this problem, here is a checklist of my suggestions (which come with no warranty or guarantee):

How is the 2038 problem related to the John Titor story?

If you are not familiar with the name John Titor, I recommend you browse the site JohnTitor.com. I understand that my site's URL appears quoted a number of times in the discussion of this apparently transtemporal Internet celebrity. I don't know John Titor, and I have never chatted with anyone on the Internet purporting to be John Titor. The stories have not convinced me so far that he has traveled from another time. I also have some technical issues with his rather offhanded mention of the 2038 problem. Furthermore, John Titor has conveniently returned to his time stream and can not answer email on the subject. Having said that, I think it fair to say that I find John Titor's political commentary insightful and thought-provoking, and I consider him a performance artist par excellence.

Sources: The Project 2038

Posted in

News

New Trick to Download from RapidShare with Free Account

1/10/2010 10:15:00 PM

Ee Blog

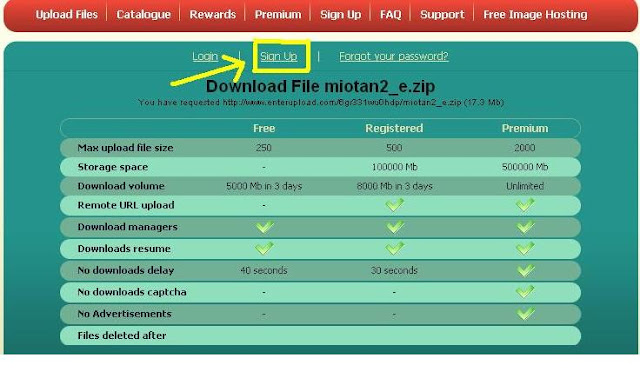

Now I will explain how to download from RapidShare with a "FREE" account, quickly, with resume support, and without using extra software. I got this from a post on KASKUS. Here's how.

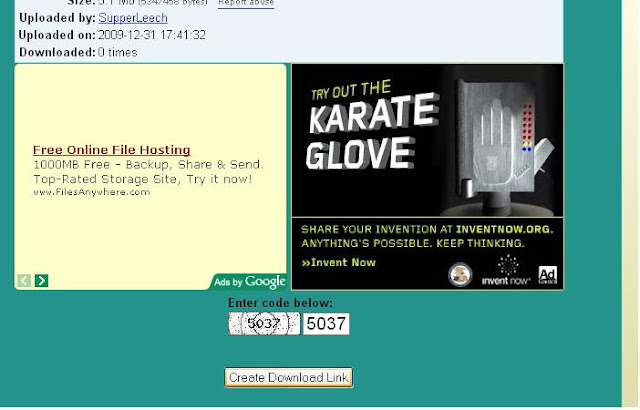

Step 1:

Sign up here:

http://www.enterupload.com/

Step 2:

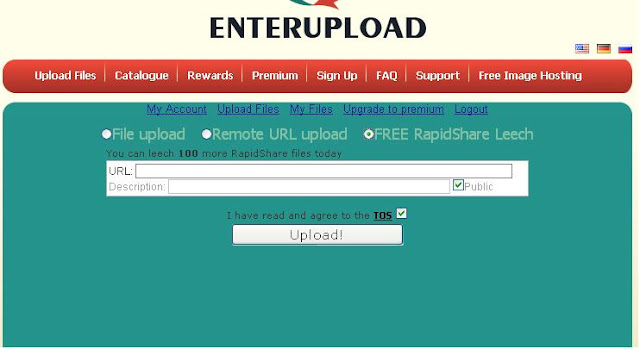

Log in, then click "Upload files", then click "FREE RapidShare LEECH". After that, insert the links to your RapidShare download, then click "UPLOAD".

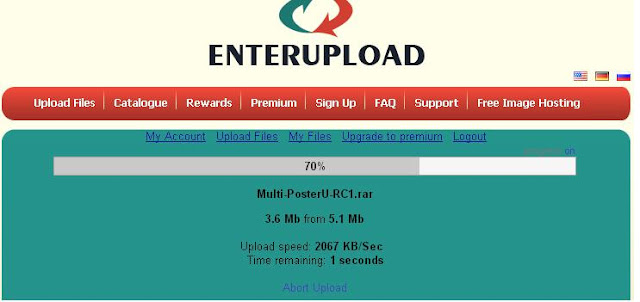

Wait until the upload is complete ...

Step 3:

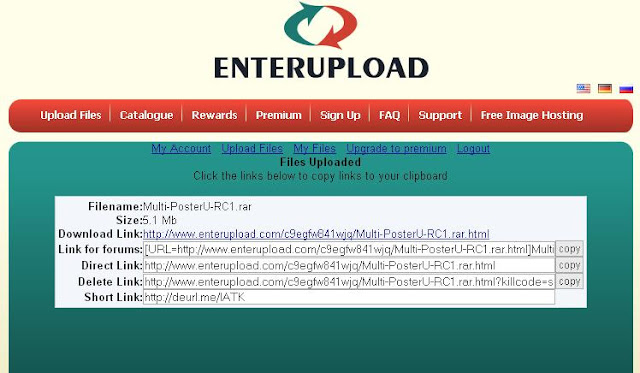

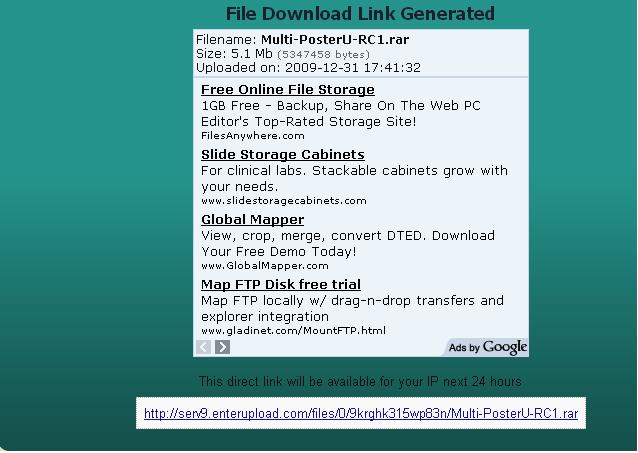

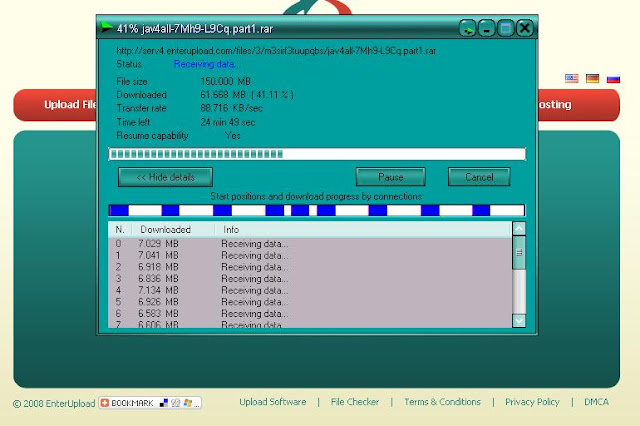

When it has finished, there will be a direct download link. Click the link provided; you can download it with a download manager such as Internet Download Manager (IDM).

Step 4:

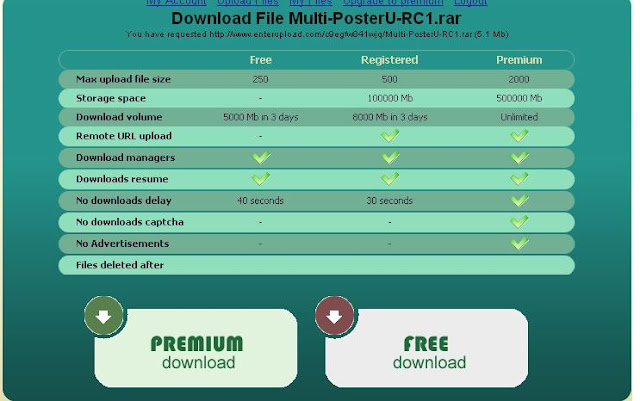

Then click "FREE DOWNLOAD"

Step 5:

Enter the captcha code. If there is a message "This direct link will be available for your IP next 24 hours", just ignore it.

Step 6:

You can just download it with IDM (fast, and the download can be resumed). Done.

Tips:

You can download a maximum of 20 times in 24 hours. If you want to download again, clear your browser cookies and use another IP address, for example through a proxy, Auto Hide IP, or similar. Alternatively: log out, turn off your internet connection temporarily (briefly pull the cable), clear your browser cookies, then register again with a different email address (no verification is requested, so any email will do), a different username, and a different password, and start downloading again.

Posted in

Tips and Tricks

Complete SEO for blogs and sites

12/10/2009 08:13:00 PM

Ee Blog

SEO which you need to do after you download the template and upload it to your blog:

After uploading your template successfully, stay on the same Dashboard>Layout>Edit HTML page and look through the template code. Find the META tags, which are in the first lines of the code. META tags start with 'meta'. Do the following steps:

- Provide your Blog Description in Meta Description tag by removing "Your Blog description..."

- Provide words relevant to your blog in the Meta Keywords tag by removing "Your keywords..."

- Provide yourname/company in the Meta authors tag by removing "Yourname/company"

The second step is to submit your blog/site to the 3 major search engines [Google | Yahoo | Bing] to get indexed and get traffic. You can do this by submitting only the index/main page of your site using their submission links below:

That's it!

If you need to customize and search engine optimize your blog/site in more depth, you can follow my complete Customization and SEO guide provided below. You need some HTML, XML and CSS skills to work it out.

COMPLETE CUSTOMIZATION AND SEO GUIDE FOR BLOG/SITE

HTML size

Page size matters because search engines limit size of a cached page. For example, Google will only cache a full page if the size of its HTML is less than 101 Kb (images and external scripts are not included). Yahoo! caches text of up to 500 Kb per page. This means if your HTML page is too large, search engines will not cache the full page, and only the top part of the text will be searchable.

Same color text and background

If the color of the text on a page is close to the background color, the text becomes almost invisible. As a rule, this technique is employed to populate a page with keywords without damaging its design. Since it is considered as spam by most search engines, I suggest that you do not try it.

Tiny text

If a page uses Cascading Style Sheets and there are fonts smaller than 4 pixels, they are reported as tiny texts. Most search engines consider tiny texts as an abusive practice - this is why you should avoid using them.

Immediate keyword repeats

The same keyword repeated one after the other a few times, for example 'air tickets on-line, air tickets, air tickets, air tickets, air tickets in Hong Kong', is a questionable trick. For this example, there will be three repetitions reported, because the keyword was placed three times in a row after it was used first. Such repetitions are considered spam by most search engines.
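A rough Python sketch of how such a check could count immediate repeats. The function name and the tokenizing rule (lowercase words, hyphens kept, punctuation ignored) are assumptions made for this illustration, not how any particular search engine or SEO tool works.

```python
import re


def count_immediate_repeats(text, keyword):
    """Count how many times keyword appears directly after a previous copy of itself."""
    words = re.findall(r"[\w-]+", text.lower())
    key = keyword.lower().split()
    n = len(key)
    if n == 0:
        return 0
    repeats = 0
    for i in range(n, len(words) - n + 1):
        # A repeat is a match at position i whose preceding n words are also a match.
        if words[i:i + n] == key and words[i - n:i] == key:
            repeats += 1
    return repeats


sample = ("air tickets on-line, air tickets, air tickets, "
          "air tickets, air tickets in Hong Kong")
print(count_immediate_repeats(sample, "air tickets"))  # 3
```

The first 'air tickets' is followed by 'on-line', so it is not counted; the run of four that follows contributes three repeats.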

Controls

Try to avoid too many controls on your page, especially in the top area, since it may decrease your keyword prominence and result in low rankings.

Frames

Not all search engines support frames, i.e. can follow from a frameset page to content frames and index texts. If your website consists of frames, and you cannot redesign it, you can solve this problem by putting the content of an optimized page with links to other pages into a "NOFRAMES" HTML tag.

External and Internal JavaScript

Do not use too many embedded scripts on the page, because your keyword prominence will be reduced, and thus your page will be ranked lower on search engines. We advise putting the script in an external file or moving it as close to the closing Body tag as possible.

External and Internal VBScript

Please note that excessive use of scripts in the top area of the page dilutes keyword prominence and therefore affects your rankings. Put the script in an external file or move it as close to the closing Body tag as possible.

File robots.txt allows spidering

Robots.txt is a text file placed in the root directory of a website/blog to tell robots on how to spider the website. Only robots that comply with the Robots Exclusion Standard will read and obey the commands in this file. Robots.txt is often used to prevent robots from visiting some pages and subdirectories not intended for public use. However, if you want search engine robots to spider your site, there should not be disallowing commands included within this file for all or particular search engine robots.
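To see whether a given robots.txt would let a robot spider a page, Python's standard `urllib.robotparser` module can be used. The sketch below parses an in-memory example instead of fetching a real file; the robots.txt content, the URLs, and the helper name `allows_spidering` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def allows_spidering(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt: everything is open except the /private/ folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

print(allows_spidering(ROBOTS_TXT, "Googlebot", "http://example.com/index.html"))
print(allows_spidering(ROBOTS_TXT, "Googlebot", "http://example.com/private/notes.html"))
```

The first call reports that the page may be spidered, the second that it may not, because of the Disallow line.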

Head area

Each HTML document should have a HEAD tag at the beginning of the document. The information contained inside the head tag (<head>...</head>) describes the document, but it doesn't show up on the page returned to the browser. The Title tag and meta tags are found inside the Head tag.

An HTML tag within the Head tag is used to define the title of a Web page. The content of the Title tag is displayed by browsers on the Title bar located at the top of the browser window. Search engines use the Title tag to provide a link to the site matching the user's query. The text in the Title tag is one of the most important factors influencing search engine ranking algorithms. By populating your most important keywords in the Title tag, you dramatically increase the search engine ranking of the page for those keywords. Your title tag should be the first tag in the HEAD tag.

Stop Words

To save space and speed up searching, some search engines exclude common words from their index, therefore these words are ignored when searches are carried out.

'The', 'or', 'in', 'it' are examples of such words. These words are known as "stop words." To make your pages search engine-friendly, you should avoid using stop words in the most important areas of your page like title, meta tags, headings, alternative image attributes, anchor names, etc.

Besides, stop words have no contextual meaning - using them in short areas such as a title, headings, and anchor texts will reduce weight, prominence and the frequency of keywords.

Keyword frequency

Frequency is the number of times your keyword is used in the analyzed area of the page.

Example: If the page's first heading is 'Get the best XYZ services provided by XYZ Company', frequency of keyword 'XYZ' in the heading will be two. Frequency relates only to the exact matches of a keyword. Therefore, frequency of key phrase 'XYZ services' will be one, because as exact match, this keyword is used only once.

Search engines use frequency as a measure of keyword importance.

Search engines rate pages with more keywords as more relevant results, and score them higher. However, you should not use too many keywords, since most search engines will penalize you for this practice for being seen as an attempt to artificially inflate rankings.
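The exact-match counting described above can be sketched in a few lines of Python. The function name and the tokenizing rule (lowercase, punctuation ignored) are assumptions for this example.

```python
import re


def keyword_frequency(area_text, keyword):
    """Count exact matches of a keyword or key phrase in a page area."""
    words = re.findall(r"\w+", area_text.lower())
    key = re.findall(r"\w+", keyword.lower())
    n = len(key)
    if n == 0:
        return 0
    # Slide a window of n words over the area and count exact matches.
    return sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key)


heading = "Get the best XYZ services provided by XYZ Company"
print(keyword_frequency(heading, "XYZ"))           # 2
print(keyword_frequency(heading, "XYZ services"))  # 1
```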

Keyword weight (density)

Keyword weight is a measure of how often a keyword is found in a specific area of the Web page like a title, heading, anchor name, visible text, etc. Unlike keyword frequency, which is just a count, keyword weight is a ratio.

Keyword weight will depend on the type of keyword, that is if the keyword is a single word or phrase. If the keyword includes two or more words, for example, 'XYZ services', every word in the key phrase (i.e. both 'XYZ' and 'services') contributes to the weight ratio in the weight formula, and not as one keyword ('XYZ services').

Keyword weight is calculated as the number of words in the key phrase multiplied by frequency and divided by the total number of words (including the keyword).

Example: The title of a Web page is 'Get Best XYZ Services'. Keyword weight for 'XYZ services' is 2*1/4*100%=50%. If you reduce the number of words in the title by removing the word 'get', so the title becomes 'Best XYZ Services', then the keyword weight will be larger: 2*1/3*100%=67%. Finally, if you only keep 'XYZ Services' in the title, the keyword weight will become 100% -- 2*1/2*100%.

So, to increase the keyword weight, you should either add some more keywords or reduce the number of words in the page area. The proportion of the keywords to all words will become larger, so will the keyword weight.

Many search engines calculate keyword weight when they rank pages for a particular keyword. Normally, a high keyword weight tells search engines that the keyword is extremely important in the text; however, a weight that is too high can make search engines suspect you of spamming, and they will penalize your website's rankings.
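The weight formula given above (words in the key phrase, times frequency, divided by total words) can be tried out with a small Python sketch. The function name and the tokenizing rule are assumptions for this example; real search engines do not publish their formulas.

```python
import re


def keyword_weight(area_text, keyword):
    """Keyword weight in percent: words_in_phrase * frequency / total_words * 100."""
    words = re.findall(r"\w+", area_text.lower())
    key = re.findall(r"\w+", keyword.lower())
    n = len(key)
    if not words or n == 0:
        return 0.0
    # Frequency counts exact matches of the whole phrase only.
    frequency = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key)
    return n * frequency / len(words) * 100


for title in ("Get Best XYZ Services", "Best XYZ Services", "XYZ Services"):
    print(title, "->", round(keyword_weight(title, "XYZ services")), "%")
```

For the three titles this prints 50, 67 and 100 percent, matching the worked example.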

Keyword Prominence

Keyword Prominence is another measure of keyword importance that relates to the proximity of a keyword to the beginning of the analyzed page area. A keyword that is used at the beginning of the Title, Heading, or at the top of the visible text of the page is considered more important than other words. Prominence is a ratio that is calculated separately for each important page area such as a title, headings, visible text, anchor tags, etc.

HTML pages are written in a document-like fashion. The most important items of a document's visible text are placed at the top, and their importance is gradually reduced towards the bottom. This idea can be also applied to keyword prominence. Normally, the closer a keyword to the top of a page and to the beginning of a sentence, the higher its prominence is. However, search engines also check if the keyword is present in the middle and at the bottom of the page, so you should place some keywords there too.

The prominence formula takes the following factors into account:

1) Keyword positions in the area,

2) Number of words in the keyword, and

3) Total number of words in the area.

100% prominence is given to a keyword or keyphrase that appears at the beginning of the analyzed page area.

Example 1: Let's take the page title 'Daily horoscopes on your desktop' and analyze prominence of keyphrase 'daily horoscopes'. The title word order will be: 'Keyword1, keyword2, word3, word4, word5'. Prominence will be 100% here as the keyphrase is present at the beginning of the sentence.

The keyword/keyphrase in the middle of the analyzed area will have 50% prominence.

Example 2: The anchor name is 'Find here the daily horoscope for your sign'. The keyword prominence of the phrase 'daily horoscope' in this case will be 50% as the keyphrase is located in the middle of the sentence -- 'Word1, word2, word3, keyword4, keyword5, word6, word7, word8'.

As a keyword appears farther back in the area, its prominence will be counted from zero and it will depend on how close to the end it is. If the keyword appears at the end of the area, its prominence will be close to 0%. If the keyword appears at the beginning of the area and then is repeated in the middle or at the end, its prominence will be 100% because prominence of the first used keyword prevails over the repeated keywords.

META Description

Syntax: <META name="Description" content="Web page description">

This is a Meta tag that provides a brief description of a Web page. It is important the description clearly describes the purpose of the page. The importance of the Description tag as an element of the ranking algorithm has decreased significantly over years, but there are still search engines that support this tag. They log descriptions of the indexed pages and often display them with the Title in their results.

The length of a displayed description varies per search engine. Therefore you should place the most important keywords at the beginning of the first sentence -- this will guarantee that both users and search engines will see the most important information about your site.

META Keywords

Syntax: <META name="Keywords" content="keyword1, keyword2, keyword3">

This is a Meta tag that lists the words or phrases about the contents of the Web page. This tag provides some additional text for crawler-based search engines. However, because of frequent attempts to abuse their system, most search engines ignore this tag. Please note that none of the major crawler-based search engines except Inktomi provide support for the Keywords Meta tag.

Similar to the description tag, there is a limit in the number of captured characters in Keywords meta tag. Ensure you've chosen keywords that are relevant to the content of your site. Avoid repetitions as search engines can penalize your rankings. Move the most important keywords to the beginning to increase their prominence.

META Refresh

Syntax: <META http-equiv="refresh" content="0;url=http://newURL.com/">

This HTML META tag also belongs in the Head tag of your HTML page.

The META Refresh tag is often used as a way to redirect the viewer to another Web page or refresh the content of the viewed page after a specified number of seconds. The META Refresh tag is also sometimes used as a doorway page optimized for a certain search engine, which is accessed first by users, who then are redirected to the main website. Some search engines discourage the use of this META tag, because it is an opportunity for webmasters to spam search engines with similar pages that all lead to the same page. In addition, this also clutters the search engines databases with irrelevant and multiple versions of the same data. Try to avoid doorways and redirects altogether in your Web/blog building.

META Robots

Syntax: <META name="Robots" content="INDEX,FOLLOW">

The robots instructions are normally placed in a robots.txt file that is uploaded to the root directory of a domain. However, if a webmaster does not have access to /robots.txt, then instructions can be placed in the Robots META tag. This tag tells the search engine robots whether a page should be indexed and included in the search engine database and its links followed.

The content of the robots meta tag is a comma separated list that may contain the following commands:

- ALL, also INDEX,FOLLOW -- there are no restrictions on indexing the page or following links;

- NONE, also NOINDEX,NOFOLLOW -- robots must ignore the page;

- a combination of INDEX, FOLLOW, NOINDEX, NOFOLLOW -- if you want a search engine robot just to index a page but not to follow links, you should specify 'INDEX,NOFOLLOW'; if you want it to follow links without indexing the page, you should instruct robots as 'NOINDEX,FOLLOW'.
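The commands listed above can be read mechanically. Here is a minimal Python sketch that turns the content of a Robots META tag into two yes/no answers; the function name is made up, and treating a missing or unknown command as "no restriction" is an assumption of this example.

```python
def parse_robots_meta(content):
    """Return (index, follow) booleans for a Robots META content string."""
    index, follow = True, True  # assumption: no restrictions unless stated
    for token in content.split(","):
        token = token.strip().upper()
        if token == "ALL":
            index, follow = True, True
        elif token == "NONE":
            index, follow = False, False
        elif token == "INDEX":
            index = True
        elif token == "NOINDEX":
            index = False
        elif token == "FOLLOW":
            follow = True
        elif token == "NOFOLLOW":
            follow = False
    return index, follow


print(parse_robots_meta("INDEX,NOFOLLOW"))  # (True, False)
print(parse_robots_meta("NONE"))            # (False, False)
```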

BODY area

The body tag identifies the beginning of the main section of your Web page, the main content area. The whole of the Web page is designed between the opening and closing body tag (<body>...</body>), including all images, links, text, headings, paragraphs, and forms.

The recommendations on how to use keywords in the BODY tag are the same as in other important areas. Your primary keywords should be placed at the top of your body tag (first paragraph) and as close to the beginning of a sentence as possible. Do not forget to use them again in each paragraph. Keywords should not be repeated one after another. For search engines that check keyword presence at the bottom of the body tag, you should use your most important keywords within the last paragraph from the closing body tag.

Visible text

The content of the Body tag includes both visible and invisible text. The term 'Visible text' refers to the portion that is displayed by the browser.

Extra emphasis by search engines is put on keywords when you underline them or make them bold, thus helping higher rankings for these keywords.

Keyword in the Heading

It is important the keyword is present in the very first heading tag on the page regardless of its type. If the keyword is also used as a first word, you will raise its prominence.

All headings

There are standard rules for the structure of HTML pages. They are written in a document-like fashion. In a document, you start with the title, then a major heading that usually describes the main purpose of the section. Subheadings highlight the key points of each subsection. Many search engines rank the words found in headings higher than the words found in the text of the document. Some search engines incorporate keywords by looking at all the heading tags on a page.

Links

Anchor tags on the page can also have keyword-rich text as anchor names. This text can be important to some search engines and therefore also for the rankings of the destination pages. Create anchored links with keywords in them to link pages of your website.

Text in links including ALTs

Images like buttons, banners, etc. may include Alt attributes as a text comment describing the graphic image. If this image has been used as a hyperlink, the Alt attribute is interpreted as a link text by some search engines, and the destination page will have a significant boost in rankings for the keyword in the Alt attribute. Use graphic links with keyword-rich Alts to link pages of your website.

ALT image attributes

Optimization of Alt image attributes gives you another opportunity to use keywords. It is advantageous if the page is designed with large graphics and very little text. Include the target keyword in at least the first three Alt attributes.

Comments

This tag lets webmasters write notes about the page code, which is only for their guidance and is invisible to the browser. Most search engines do not read the content of this tag, so Comments optimization will not be as helpful as Title optimization. The Comment tags should be populated with keywords only if the design of the Web page does not allow more efficient and search engine-friendly methods.

Keyword in URL

Having a keyword in your domain name and/or folder names and file names increases your chances of gaining top positions for that keyword. If you aren't a brand-oriented business, it is recommended that you purchase a domain name that contains your keyword. If your keyphrase consists of more than one keyword, the best way to separate them in the URL is with a hyphen "-":

www.my-keyphrase-here.com

If it seems impossible to get such a domain name, or your site is already well established over a keyword-poor domain, attempt to compensate it by using keywords in folder and file names of your site's file system on the server.

Link popularity

This is the number of links from other website pages to your page that search engines are aware of.

Each search engine only lists links embedded on the sites that are preindexed by that particular search engine. So, the presence of certain links in Google's index will not guarantee that Inktomi has also indexed the same sites. Therefore the number of links shown will be different from engine to engine.

In general, the more links that point to your page, the better your page will rank.

However, a large number of links is not the deciding factor that helps your site get to the top of the results pages -- the quality of those links is of greater importance. If a link to your site is placed on a page of very little importance (that is, the page itself is linked to from only a few other pages or none), this kind of link will not improve your page's popularity. The links to your pages should be subject-relevant because theme-based search engines will check the parity of content between referring and referred pages. The closer they are, the more relevant your site page is to the searcher's query for your keyword. Avoid reciprocal linking with sites that have a low weight or a questionable reputation, or that are different from yours in subject matter. As a part of their anti-spam measures, search engines can penalize your site's rankings for ignoring these pitfalls.

Theme

For spam-free and relevant results, search engines start evaluating sites as one page to find the main theme covering all pages of the site. Most major search engines have become theme-based.

Search engines extract and analyze words on all pages of a website to discover its theme. The more keywords found on your website that relate to the user's query, the more points you get for the theme. Therefore, if your Web business includes many products or services, try to find the theme that covers them all.

Open Directory Project listing (dmoz.org)

The ODP (also known as DMOZ) is the largest human-edited directory on the Web. Many major search engines use the ODP data to provide their directory results. This works because sites put forward for inclusion in the ODP are reviewed by real people who care about the quality of their directory.

It is still good for a website to be present in the ODP. For new sites, it is an excellent starting point, because Google regularly spiders the ODP to update its own directory based on the ODP listings, and if your site is included, you'll get a link that Google believes important enough to start off crawling your site.

As well as the weight of a link from the ODP, it would be even better if the site were listed in the most topic-specific category to make the link not only important, but also content-relevant.

Yahoo! Directory listing

This is similar to the ODP -- Google relationship. The Yahoo! directory is regularly crawled by the Yahoo! robots.

A new site has a greater chance of being included faster in the Yahoo! search engine if there is a link to this site from the Yahoo! directory. If you get your site listed within the Yahoo! category closest to your site theme, this particular link will help your site move up.

Search Engine Bot

A search engine bot is a type of web crawler that collects web documents to generate and maintain the index for a search engine.

Google PageRank

Google PageRank is the measure of a page’s importance in Google’s opinion. PR calculations are based on how many quality and relevant sites across the Web link to this page. The higher the PageRank of the referring page, the more weight this link has.

Alexa Traffic Rank

Alexa Traffic Rank is a combined measure of page views and users (reach). This information is gathered with the help of Alexa Toolbar used by millions of Web surfers. First, Alexa calculates the reach and number of page views for all sites on the Web on the daily basis. Then these two quantities are averaged over time.

Backlinks Theme

To determine your site rankings, search engines take into consideration theme relevance of those sites linking to you. If the linking sites have something in common with yours (keywords in the BODY, titles, descriptions of the linked pages, etc.), your website gets better chances to gain high positions for these keywords.

PR Statistics for linking sites

PR Statistics for linking sites is statistic information about the Page Rank of the pages linking to you. Statistics are presented both in numerical and percentage terms. The higher the PR of the referring site, the better chances your own Web page has to get high PR.

Posted in

News,

Tips and Tricks
